Set up Amplify Storage

Prerequisite: Install and configure the Amplify CLI

Storage with Amplify

The AWS Amplify Storage module provides a simple mechanism for managing user content for your app with public, protected, or private access levels. The Storage category comes with built-in support for Amazon S3.
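The three access levels are not separate buckets. Judging from the IAM policies later on this page, each level corresponds to a key prefix inside a single bucket: public/, protected/{identity ID}/ and private/{identity ID}/. The protected and private prefixes are scoped by the user's Cognito identity ID. The minimal sketch below makes that layout concrete. The helper name s3KeyFor and the sample identity ID are hypothetical and not part of the aws-amplify API. Amplify's Storage category normally builds these keys for you.

```javascript
// Hypothetical helper (not part of aws-amplify): maps an access level to the
// S3 object key layout that the IAM policies on this page expect.
function s3KeyFor(accessLevel, key, identityId) {
  switch (accessLevel) {
    case 'public':
      // Readable and writable by every user, signed in or not.
      return `public/${key}`;
    case 'protected':
    case 'private':
      // Writes are scoped to the owner's Cognito identity ID
      // (the policies also let any user read protected/* objects).
      if (!identityId) {
        throw new Error(`"${accessLevel}" keys require a Cognito identity ID`);
      }
      return `${accessLevel}/${identityId}/${key}`;
    default:
      throw new Error(`Unknown access level: ${accessLevel}`);
  }
}

// Example with a made-up identity ID.
const identityId = 'us-east-1:11111111-2222-3333-4444-555555555555';
console.log(s3KeyFor('public', 'logo.png')); // public/logo.png
console.log(s3KeyFor('protected', 'avatar.png', identityId));
console.log(s3KeyFor('private', 'notes.txt', identityId));
```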

There are two ways to add storage with Amplify: manual and automated. Both methods require the Auth category with Amazon Cognito to also be enabled. If you are creating an S3 bucket from scratch, use the Automated Setup. However, if you are reusing existing Cognito and S3 resources, opt for the Manual Setup.

Automated Setup: Create storage bucket

To start from scratch, run the following command from the root of your project:

```
amplify add storage
```

Then select Content when prompted:

```
? Please select from one of the below mentioned services (Use arrow keys)
❯ Content (Images, audio, video, etc.)
  NoSQL Database
```

The CLI will walk you through the options to enable Auth (if it is not already enabled) and to name your S3 bucket. To update your backend, run:

```
amplify push
```

When your backend is successfully updated, your new configuration file, aws-exports.js, is copied to your source directory (for example, /src).

Configure your application

Add Amplify to your app with yarn or npm:

```
npm install aws-amplify@^5
```

Import and load the configuration file in your app. We recommend adding the Amplify configuration step to your app's root entry point: for example, App.js (Expo) or index.js (React Native CLI).

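```javascript
import { Amplify, Storage } from 'aws-amplify';
import awsconfig from './aws-exports';

// Configure all Amplify categories (including Storage) from the CLI-generated file.
Amplify.configure(awsconfig);
```

Manual Setup: Import storage bucket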

To configure Storage manually, you also have to configure the Amplify Auth category. In other words, instead of importing the autogenerated aws-exports.js, you add the identifiers of your existing Amazon Cognito and Amazon S3 resources to your app like this:

```javascript
import { Amplify, Auth, Storage } from 'aws-amplify';

Amplify.configure({
  Auth: {
    identityPoolId: 'XX-XXXX-X:XXXXXXXX-XXXX-1234-abcd-1234567890ab', // REQUIRED - Amazon Cognito Identity Pool ID
    region: 'XX-XXXX-X', // REQUIRED - Amazon Cognito Region
    userPoolId: 'XX-XXXX-X_abcd1234', // OPTIONAL - Amazon Cognito User Pool ID
    userPoolWebClientId: 'XX-XXXX-X_abcd1234' // OPTIONAL - Amazon Cognito Web Client ID
  },
  Storage: {
    AWSS3: {
      bucket: '', // REQUIRED - Amazon S3 bucket name
      region: 'XX-XXXX-X', // OPTIONAL - Amazon service region
      isObjectLockEnabled: true // OPTIONAL - Object Lock parameter
    }
  }
});
```

You can configure the S3 Object Lock parameter of the bucket using the isObjectLockEnabled configuration field. By default, isObjectLockEnabled is set to false. If Object Lock is enabled on the bucket (for example, through the S3 console), you must set isObjectLockEnabled to true before performing a put operation against it.
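If you assemble the manual configuration from environment-specific values, a small builder can catch a missing REQUIRED field before Amplify.configure() is ever called. The sketch below is a minimal example that assumes the field names and the REQUIRED/OPTIONAL markings shown above. The helper buildManualConfig, its bucketRegion parameter and the placeholder values are hypothetical. The aws-amplify import is left out, so the snippet runs on its own.

```javascript
// Hypothetical helper (not part of aws-amplify): assembles the object passed to
// Amplify.configure() for the manual setup and checks the REQUIRED fields.
function buildManualConfig({
  identityPoolId,
  region,
  userPoolId,
  userPoolWebClientId,
  bucket,
  bucketRegion,
  isObjectLockEnabled = false, // Matches the documented default.
}) {
  const required = { identityPoolId, region, bucket };
  const missing = Object.keys(required).filter((name) => !required[name]);
  if (missing.length > 0) {
    throw new Error(`Missing required field(s): ${missing.join(', ')}`);
  }

  const auth = { identityPoolId, region };
  // The User Pool fields are OPTIONAL, so add them only when provided.
  if (userPoolId) auth.userPoolId = userPoolId;
  if (userPoolWebClientId) auth.userPoolWebClientId = userPoolWebClientId;

  const s3 = { bucket, isObjectLockEnabled };
  // The bucket region is OPTIONAL as well.
  if (bucketRegion) s3.region = bucketRegion;

  return { Auth: auth, Storage: { AWSS3: s3 } };
}

// Placeholder values; substitute your own resource identifiers.
const config = buildManualConfig({
  identityPoolId: 'us-east-1:00000000-0000-0000-0000-000000000000',
  region: 'us-east-1',
  bucket: 'my-app-userfiles-dev',
});
console.log(JSON.stringify(config, null, 2));
// In the app: Amplify.configure(config);
```

Leaving the optional fields out, rather than setting them to empty strings, keeps the resulting object identical in shape to the hand-written example above.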

Using Amazon S3

If you set up your Cognito resources manually, the roles will need to be given permission to access the S3 bucket.

Cognito creates two roles: an Auth_Role that grants bucket access to signed-in users and an Unauth_Role that allows unauthenticated access to resources. Attach the corresponding policy to each role for proper S3 access, replacing {enter bucket name} with the name of your S3 bucket.

Inline policy for the Auth_Role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": [
        "arn:aws:s3:::{enter bucket name}/public/*",
        "arn:aws:s3:::{enter bucket name}/protected/${cognito-identity.amazonaws.com:sub}/*",
        "arn:aws:s3:::{enter bucket name}/private/${cognito-identity.amazonaws.com:sub}/*"
      ],
      "Effect": "Allow"
    },
    {
      "Action": ["s3:PutObject"],
      "Resource": ["arn:aws:s3:::{enter bucket name}/uploads/*"],
      "Effect": "Allow"
    },
    {
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::{enter bucket name}/protected/*"],
      "Effect": "Allow"
    },
    {
      "Condition": {
        "StringLike": {
          "s3:prefix": [
            "public/",
            "public/*",
            "protected/",
            "protected/*",
            "private/${cognito-identity.amazonaws.com:sub}/",
            "private/${cognito-identity.amazonaws.com:sub}/*"
          ]
        }
      },
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::{enter bucket name}"],
      "Effect": "Allow"
    }
  ]
}
```

Inline policy for the Unauth_Role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
      "Resource": ["arn:aws:s3:::{enter bucket name}/public/*"],
      "Effect": "Allow"
    },
    {
      "Action": ["s3:PutObject"],
      "Resource": ["arn:aws:s3:::{enter bucket name}/uploads/*"],
      "Effect": "Allow"
    },
    {
      "Action": ["s3:GetObject"],
      "Resource": ["arn:aws:s3:::{enter bucket name}/protected/*"],
      "Effect": "Allow"
    },
    {
      "Condition": {
        "StringLike": {
          "s3:prefix": ["public/", "public/*", "protected/", "protected/*"]
        }
      },
      "Action": ["s3:ListBucket"],
      "Resource": ["arn:aws:s3:::{enter bucket name}"],
      "Effect": "Allow"
    }
  ]
}
```

The policy template that the Amplify CLI uses is found here.
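Before attaching a policy, it can help to fill in the bucket placeholder programmatically and sanity-check what a role can reach. The sketch below does both for the Unauth_Role policy above. It assumes a hypothetical bucket name, my-app-userfiles-dev. The helpers renderPolicy, wildcardMatch and isActionAllowed are illustrative and are not an AWS API. The wildcard check is a deliberate simplification of IAM evaluation: it skips statements with a Condition, ignores Deny statements and does not expand policy variables such as ${cognito-identity.amazonaws.com:sub}. Use the IAM policy simulator for an authoritative answer.

```javascript
// Hypothetical helpers (not an AWS API): fill in the bucket placeholder used in
// the policies above, then check which object ARNs a policy allows.

// Replace every "{enter bucket name}" placeholder in a policy template.
function renderPolicy(template, bucketName) {
  const json = JSON.stringify(template).split('{enter bucket name}').join(bucketName);
  return JSON.parse(json);
}

// Simplified IAM-style wildcard match: "*" matches any run of characters and
// "?" matches exactly one character. Other regex characters are escaped.
function wildcardMatch(pattern, value) {
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, '\\$&');
  const source = escaped.replace(/\*/g, '.*').replace(/\?/g, '.');
  return new RegExp(`^${source}$`).test(value);
}

// True if some unconditional Allow statement covers the action and resource.
// Statements with a Condition (such as the s3:ListBucket one) are skipped and
// Deny statements are ignored, so this is only a rough sanity check.
function isActionAllowed(policy, action, resourceArn) {
  return policy.Statement.some(
    (statement) =>
      statement.Effect === 'Allow' &&
      !statement.Condition &&
      statement.Action.includes(action) &&
      statement.Resource.some((pattern) => wildcardMatch(pattern, resourceArn))
  );
}

// The Unauth_Role policy from above, as a template.
const unauthTemplate = {
  Version: '2012-10-17',
  Statement: [
    {
      Action: ['s3:GetObject', 's3:PutObject', 's3:DeleteObject'],
      Resource: ['arn:aws:s3:::{enter bucket name}/public/*'],
      Effect: 'Allow',
    },
    {
      Action: ['s3:PutObject'],
      Resource: ['arn:aws:s3:::{enter bucket name}/uploads/*'],
      Effect: 'Allow',
    },
    {
      Action: ['s3:GetObject'],
      Resource: ['arn:aws:s3:::{enter bucket name}/protected/*'],
      Effect: 'Allow',
    },
    {
      Condition: {
        StringLike: { 's3:prefix': ['public/', 'public/*', 'protected/', 'protected/*'] },
      },
      Action: ['s3:ListBucket'],
      Resource: ['arn:aws:s3:::{enter bucket name}'],
      Effect: 'Allow',
    },
  ],
};

const policy = renderPolicy(unauthTemplate, 'my-app-userfiles-dev'); // hypothetical bucket
const arn = (key) => `arn:aws:s3:::my-app-userfiles-dev/${key}`;
console.log(isActionAllowed(policy, 's3:GetObject', arn('protected/abc/photo.png'))); // true
console.log(isActionAllowed(policy, 's3:PutObject', arn('protected/abc/photo.png'))); // false
```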

Amazon S3 Bucket CORS Policy Setup

The following steps will set up your CORS Policy:

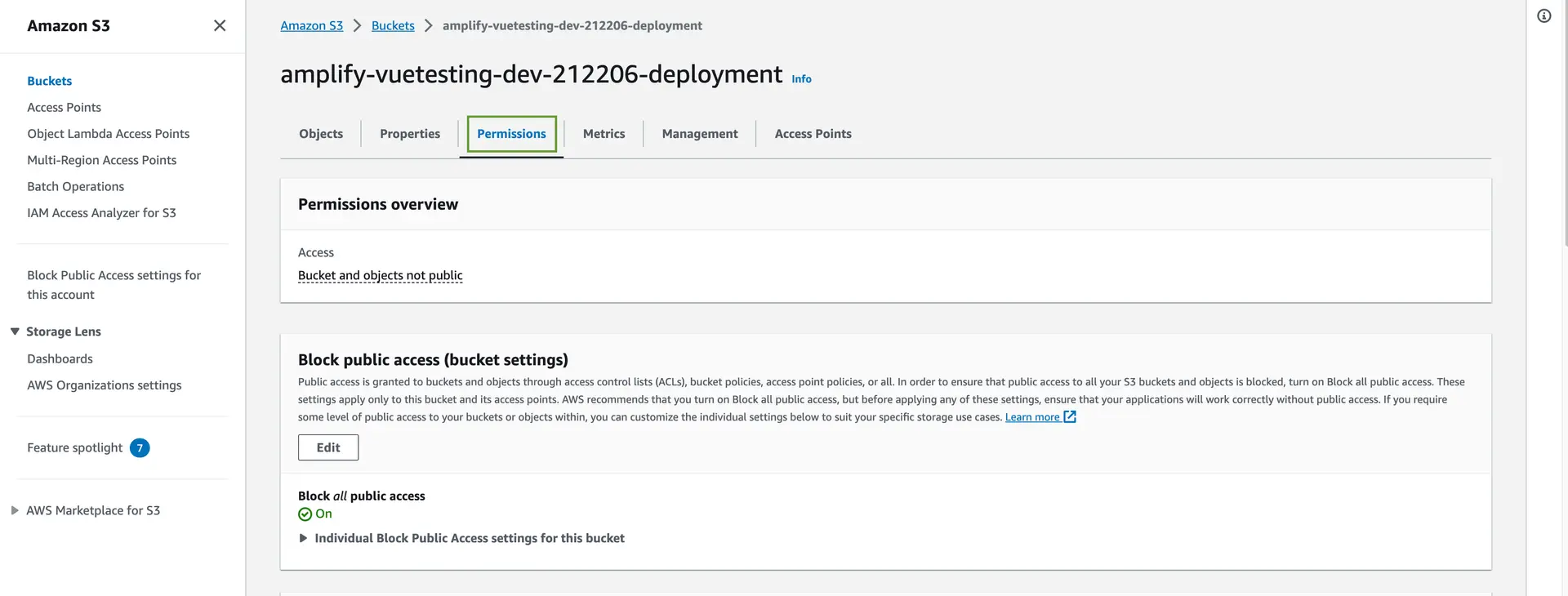

- Go to the Amazon S3 Console and click on your project's userfiles bucket, which is normally named [Bucket Name][Id]-dev.
- Click on the Permissions tab for your bucket.

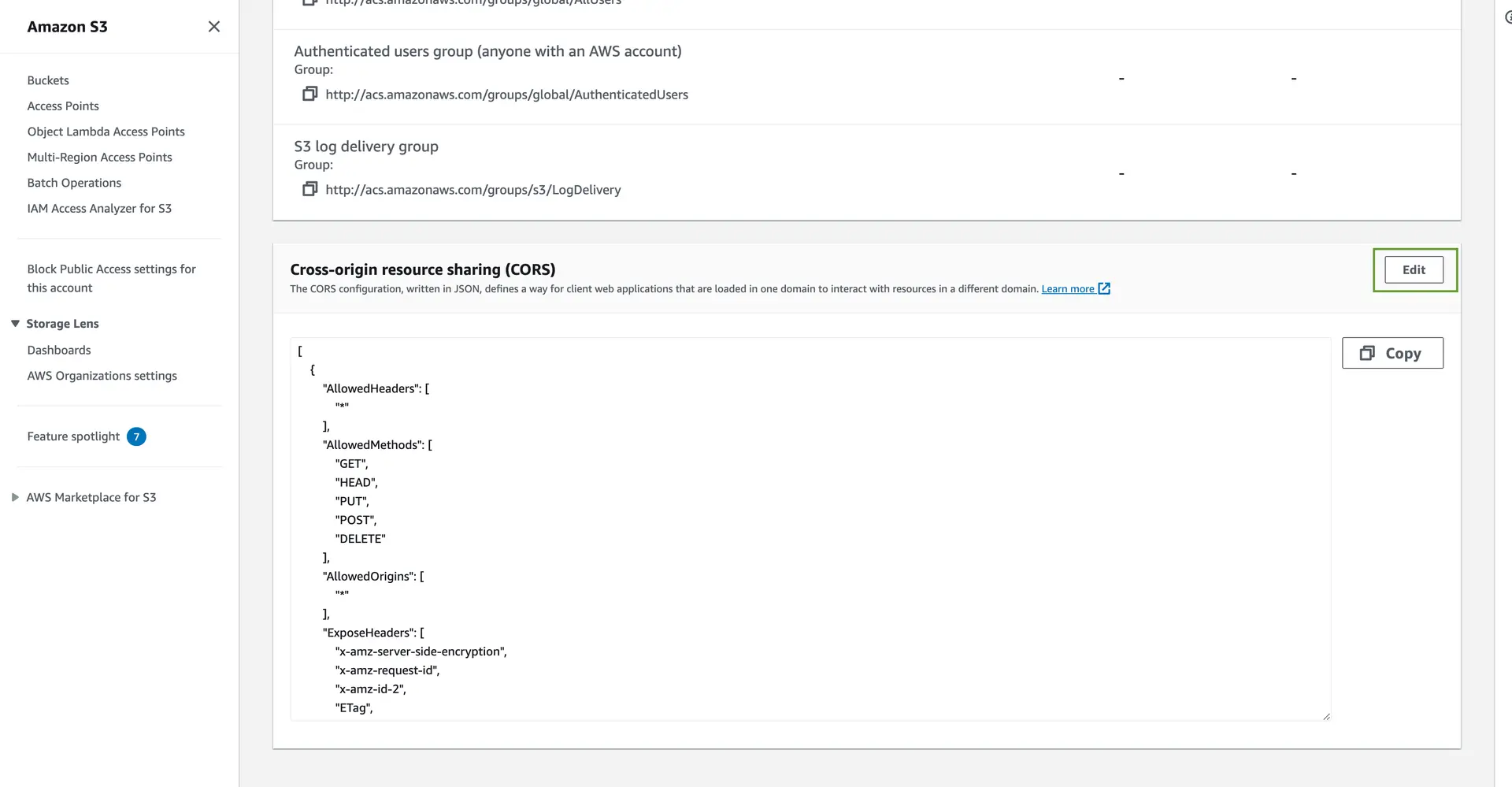

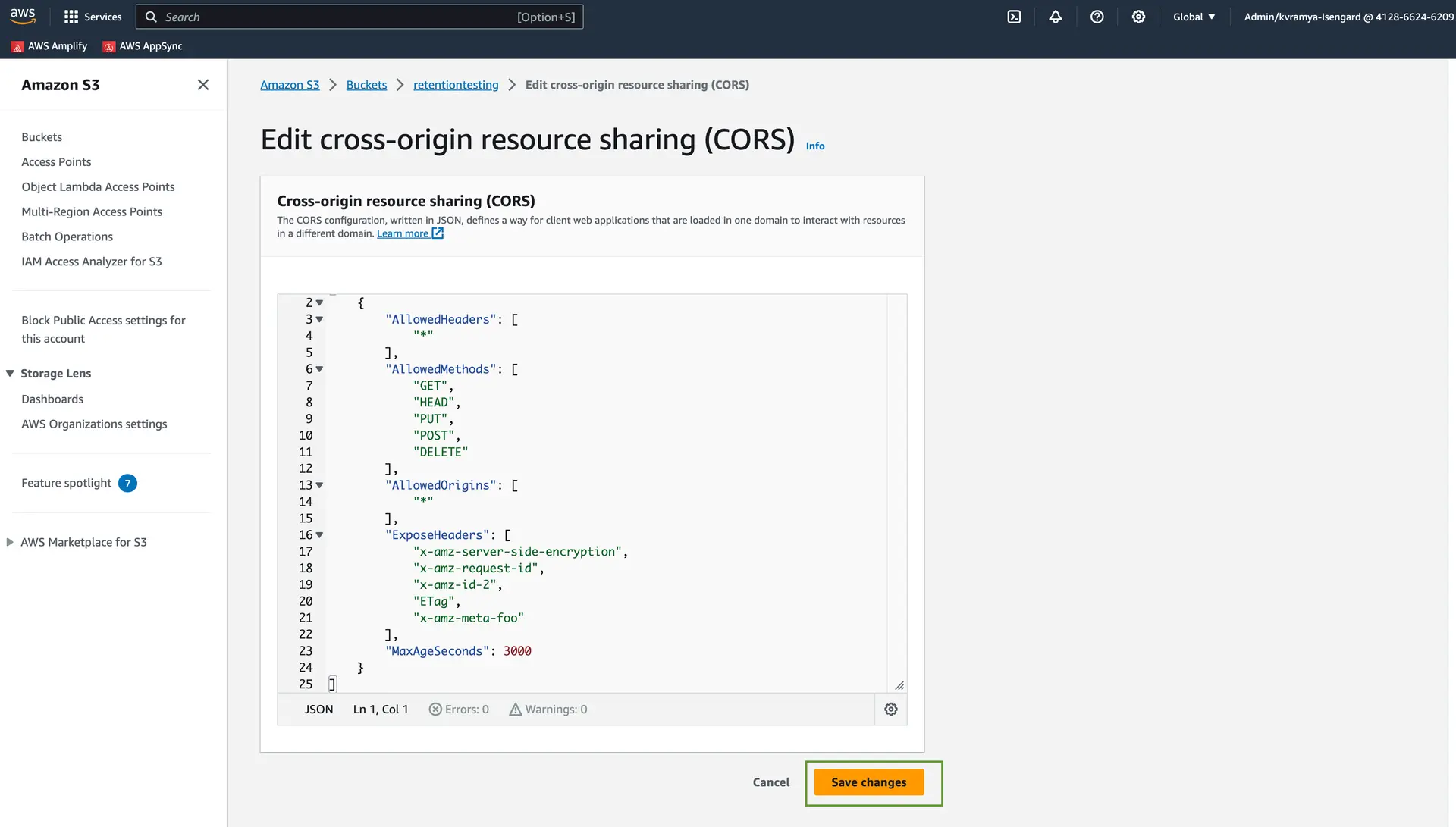

- Click the edit button in the Cross-origin resource sharing (CORS) section.

- Make the changes and click Save changes. You can expose any required metadata by adding it to ExposeHeaders in the x-amz-meta-XXXX format.

```json
[
  {
    "AllowedHeaders": ["*"],
    "AllowedMethods": ["GET", "HEAD", "PUT", "POST", "DELETE"],
    "AllowedOrigins": ["*"],
    "ExposeHeaders": [
      "x-amz-server-side-encryption",
      "x-amz-request-id",
      "x-amz-id-2",
      "ETag",
      "x-amz-meta-foo"
    ],
    "MaxAgeSeconds": 3000
  }
]
```

For information on Amazon S3 file access levels, please see file access levels.
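If your app stores several custom metadata keys, generating the CORS rule avoids typos in the x-amz-meta-XXXX header names. The sketch below rebuilds the CORS configuration above from a list of metadata keys. The helper buildCorsRules is hypothetical and not part of the AWS SDK or Amplify. Its allowedOrigins parameter is an addition that defaults to the "*" used above, so you can narrow it to your app's origins if you prefer.

```javascript
// Hypothetical helper (not part of the AWS SDK or Amplify): builds the CORS
// rule shown above, exposing each custom metadata key as an x-amz-meta-* header.
function buildCorsRules(metadataKeys = [], allowedOrigins = ['*']) {
  return [
    {
      AllowedHeaders: ['*'],
      AllowedMethods: ['GET', 'HEAD', 'PUT', 'POST', 'DELETE'],
      AllowedOrigins: allowedOrigins,
      ExposeHeaders: [
        'x-amz-server-side-encryption',
        'x-amz-request-id',
        'x-amz-id-2',
        'ETag',
        // Custom metadata is exposed in the x-amz-meta-XXXX format.
        ...metadataKeys.map((key) => `x-amz-meta-${key}`),
      ],
      MaxAgeSeconds: 3000,
    },
  ];
}

// Reproduces the example configuration above (metadata key "foo").
const rules = buildCorsRules(['foo']);
console.log(JSON.stringify(rules, null, 2)); // paste into the CORS editor
```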

Mocking and Local Testing with Amplify CLI

Amplify CLI supports running a local mock server for testing your application with Amazon S3. Please see the CLI toolchain documentation for more details.
